We’ve previously published an executive introduction to Istio service mesh, a technical deep dive, and a comparative analysis of the platform. In this final instalment of our Istio series we explore how it benefits canary release and deployment.

Canary Release and Deployment

Traditional big-bang software releases are stressful for every team involved, including developers, quality assurance, platform, and production support. Canary release and deployment eases this by exposing a new version to a limited share of traffic before it is rolled out fully. This improves quality, confidence, and overall business value to the customer when new features are released.

Figure 1 outlines the canary release and deployment process workflow using Istio. Key aspects that need to be considered include:

- Immutability of artifacts

- Versioned artifacts

- Artifacts managed in a repository

- Unified consistent build pipeline for different personas

- Unified consistent environment pipeline

- Infrastructure defined as versioned code in a repository

The release process starts with the Open Release action from the Release Manager. This action opens a new release branch, named with the next version in sequence according to semantic versioning (semver). Developers continue their work and push to the relevant release branch in the repository. At the end of the set timeline, the Release Manager closes the release branch so that it includes all the code required to deliver the business value. When the release plan is deployed, the artifacts that constitute the release are pulled from the artifact repository.
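To make the branch-naming step concrete, here is a minimal Python sketch of how the next release branch name could be derived from the current version. The `release/` prefix, the default `minor` bump, and the function names are assumptions made for illustration; the article does not prescribe them.

```python
import re

# Plain MAJOR.MINOR.PATCH only; pre-release and build metadata are out of scope here.
SEMVER = re.compile(r"^(\d+)\.(\d+)\.(\d+)$")


def next_version(current: str, bump: str = "minor") -> str:
    """Return the next version in sequence for the given bump type."""
    match = SEMVER.match(current)
    if match is None:
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {current!r}")
    major, minor, patch = (int(part) for part in match.groups())
    if bump == "major":
        return f"{major + 1}.0.0"
    if bump == "minor":
        return f"{major}.{minor + 1}.0"
    if bump == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown bump type: {bump!r}")


def release_branch(current: str, bump: str = "minor") -> str:
    """Name the branch opened by the Open Release action.

    The "release/" prefix is a hypothetical naming convention.
    """
    return f"release/{next_version(current, bump)}"
```

For example, `release_branch("1.4.2")` yields `release/1.5.0`, which the Release Manager would open and later close.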

Figure 2 shows the artifacts generated throughout the release process, indicating how each is stored, used, and deployed to the cluster to mark the completion of a new release.

Canary Deployment Solutions

Canary deployment can be implemented in different ways, according to business requirements:

- Blue/Green deployment

- Canary deployment at the namespace or cluster level

- Canary by environment/cluster (re)build (no build, partial rebuild, full rebuild).

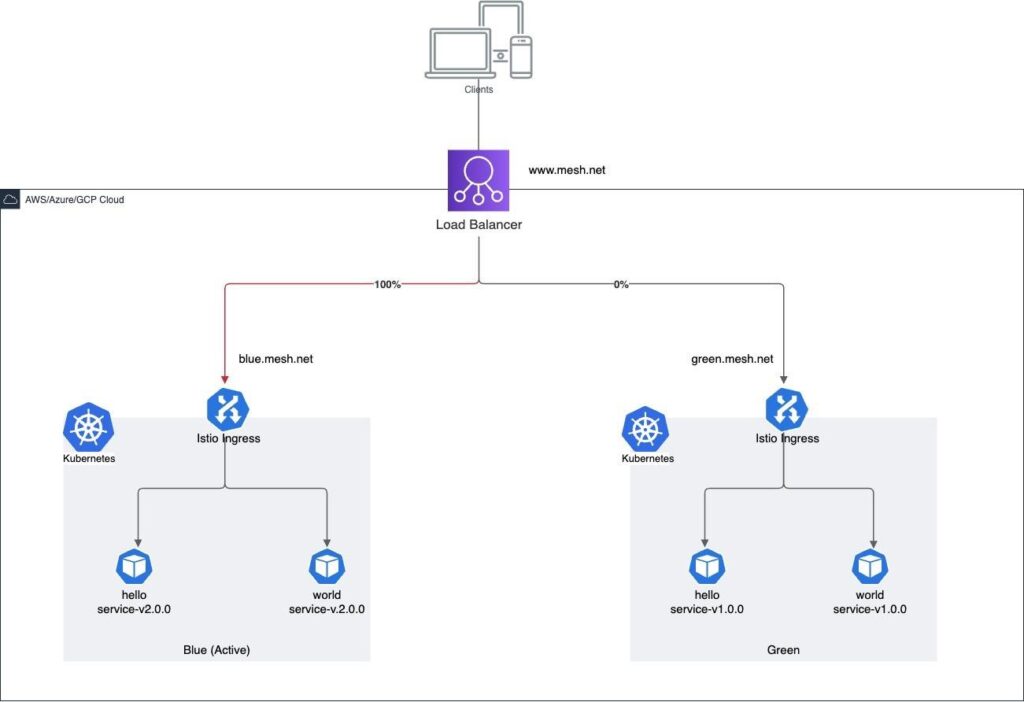

Blue/Green Deployment

With a blue/green approach, two parallel clusters must be maintained (see Figure 3). One cluster runs the current version and the other runs the next version. The clusters can also be described as ‘active/passive’. Once the new release has been deployed successfully to the passive cluster, the roles switch: the formerly passive cluster becomes the active cluster and serves 100% of the load. The impact of this switch is minimal, with downtime ranging from seconds to minutes.

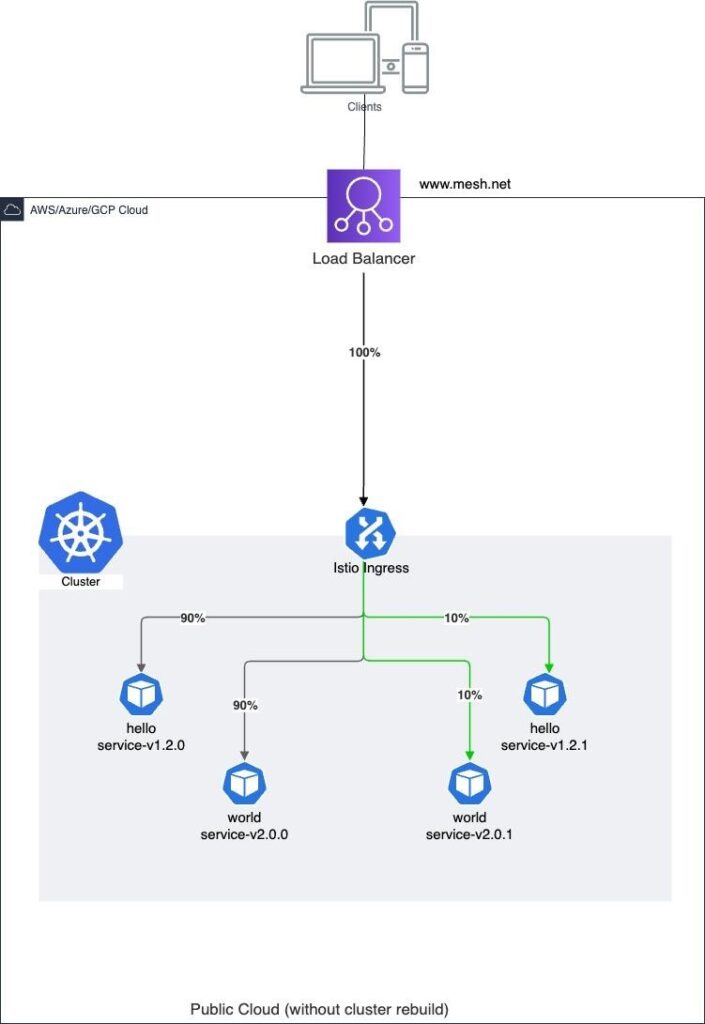

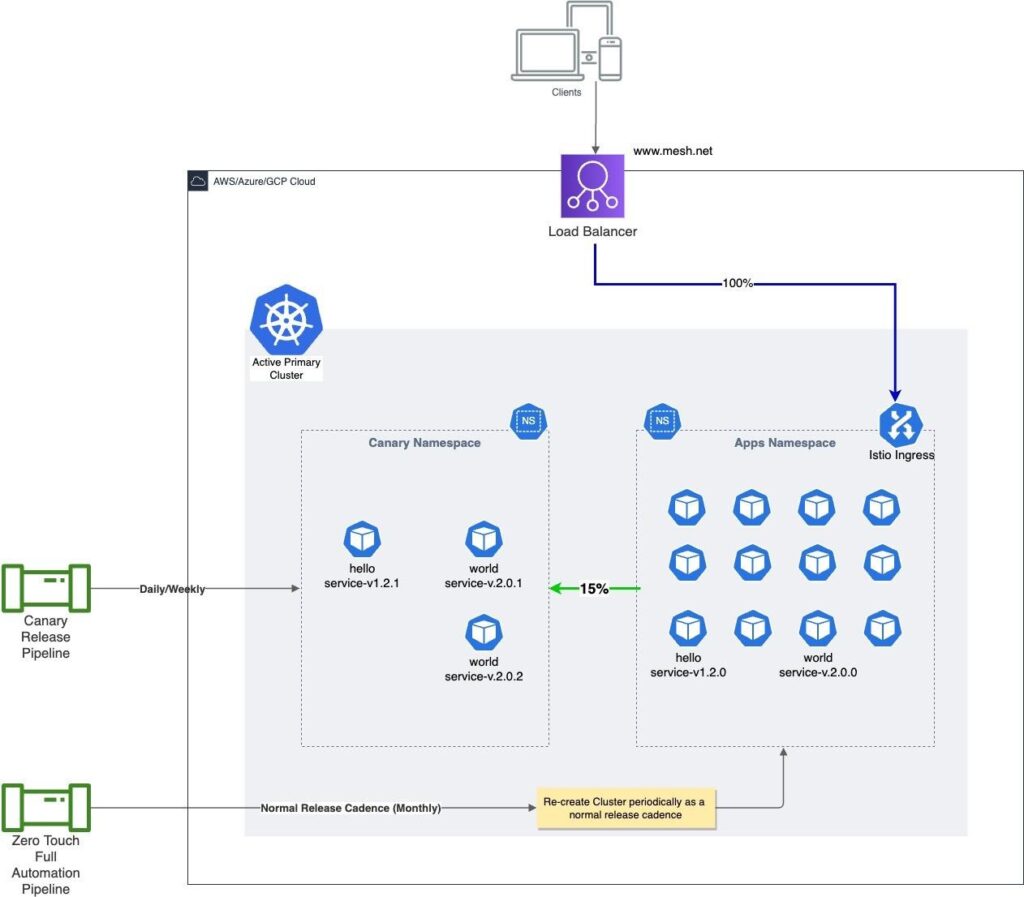
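The blue/green role switch can be modelled in a few lines of Python. This is only a sketch: the `Cluster` and `BlueGreen` types, the `healthy` flag, and the rule that an unhealthy passive cluster blocks the switch are assumptions made for illustration, not a description of any particular tooling.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    name: str
    version: str
    healthy: bool = True


@dataclass
class BlueGreen:
    active: Cluster   # serves 100% of the load
    passive: Cluster  # receives the next release

    def deploy_to_passive(self, version: str, healthy: bool) -> None:
        """Record the outcome of deploying a release to the passive cluster."""
        self.passive.version = version
        self.passive.healthy = healthy

    def switch(self) -> bool:
        """Swap roles only if the passive cluster deployed successfully."""
        if not self.passive.healthy:
            return False
        self.active, self.passive = self.passive, self.active
        return True
```

After a successful deployment, `switch()` makes the former passive cluster active, while the old active cluster stays available as the passive one.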

Single Active Cluster with Canary Release Plan Deployment

With the single active cluster approach, additional versions of the services coexist in parallel within the same cluster (see Figure 4). The service mesh configuration plays an important role here: it dynamically diverts a limited percentage of the traffic to the newer versions of the services, and the request load is shifted to them gradually as feedback confirms the expected behaviour. Over time, if the new versions function as expected and without failure, 100% of the traffic is shifted, leaving the older version passive but still available in the environment.

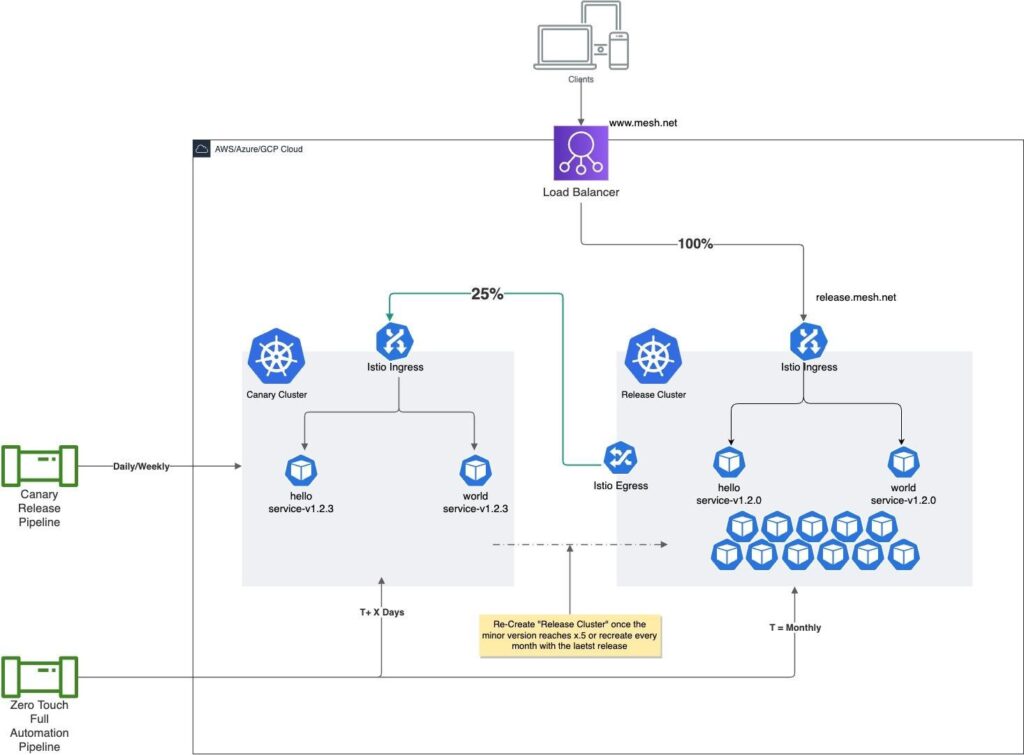
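As an illustration of the gradual traffic shift, the following Python sketch builds an Istio `VirtualService` manifest as a plain dictionary and computes the next canary weight from health feedback. The subset names `stable` and `canary` (which would need a matching `DestinationRule`), the step sizes, and the rule of dropping to 0% on failure are assumptions chosen for the example.

```python
def canary_virtual_service(host: str, canary_weight: int) -> dict:
    """Build a VirtualService that splits traffic between two subsets.

    Subset names "stable" and "canary" are hypothetical and must match
    subsets defined in a DestinationRule for the same host.
    """
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": host},
        "spec": {
            "hosts": [host],
            "http": [{
                "route": [
                    {"destination": {"host": host, "subset": "stable"},
                     "weight": 100 - canary_weight},
                    {"destination": {"host": host, "subset": "canary"},
                     "weight": canary_weight},
                ],
            }],
        },
    }


def next_weight(current: int, healthy: bool, steps=(5, 25, 50, 100)) -> int:
    """Advance to the next step while feedback is good.

    Returning 0 on bad feedback is an assumed rollback policy.
    """
    if not healthy:
        return 0
    for step in steps:
        if step > current:
            return step
    return 100
```

A rollout loop would call `next_weight` after each observation window and apply the manifest from `canary_virtual_service` with the new weight, for instance by serialising it to YAML and applying it to the cluster.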

Parallel Cluster with Only Canary Plan Deployment

An alternative is the parallel cluster approach (see Figure 5). Here, a second cluster is maintained, not with a full deployment but with only a partial set, or delta, of the services deployed on the full cluster.

The delta cluster can be considered the canary cluster: all new versions of applications are deployed there, while the stable working version (the previous version) is moved from the delta cluster to the full cluster, replacing and removing older versions. At any time, the full cluster has version N of an application while the delta cluster has version N+1. In this approach, the full release plan runs on the full cluster, while the canary release pipeline deploys only the intended applications, one at a time. Canary weight or size should be driven by advanced traffic-rules configuration at the Egress Gateway of the full cluster.

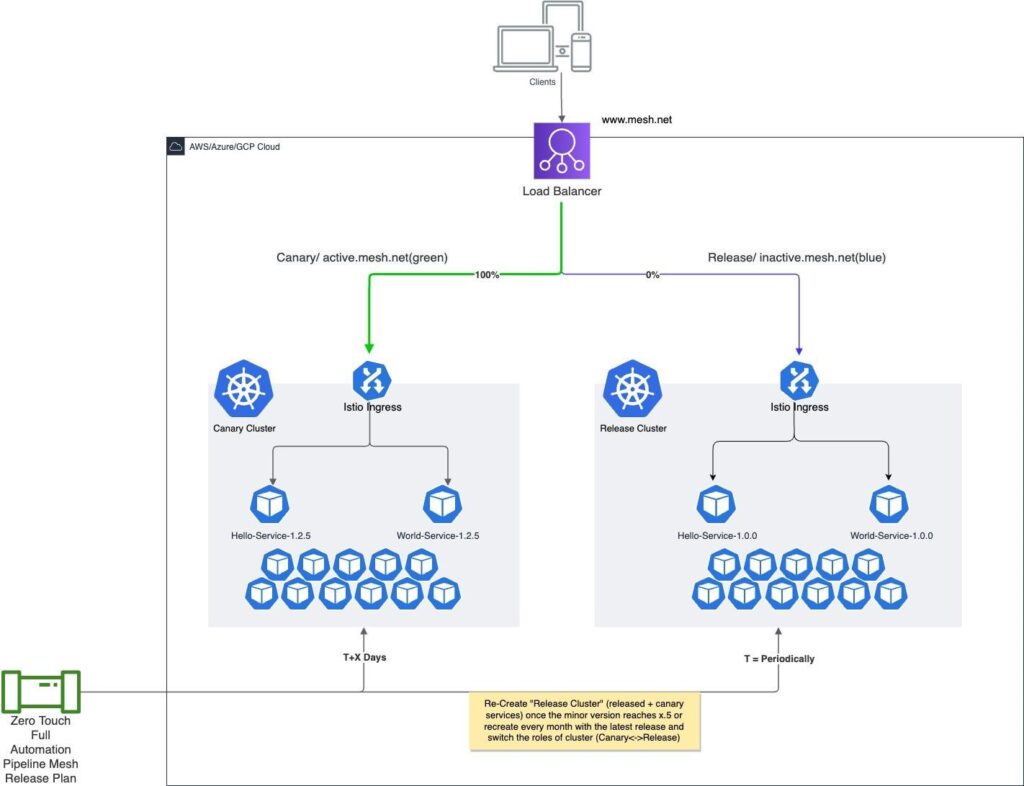

Parallel Cluster with Full Plus Canary Release Plan

With this approach, a new parallel cluster is rebuilt from the release plan of the new release, deploying only the delta to the full active cluster. Instead of reusing the canary cluster and deploying a new release version of a single application, the previous canary cluster is discarded and a new cluster is built, with all the applications that have a new release version deployed together in a single pipeline. Figure 6 visualises the process.

Single Cluster with Parallel Canary Release Namespace

Similarly, a separate namespace can be created for deploying canary versions of the applications (see Figure 7). A full release plan is executed in a release namespace, and incremental canary releases are deployed into the ‘canary’ namespace, overriding any existing objects.

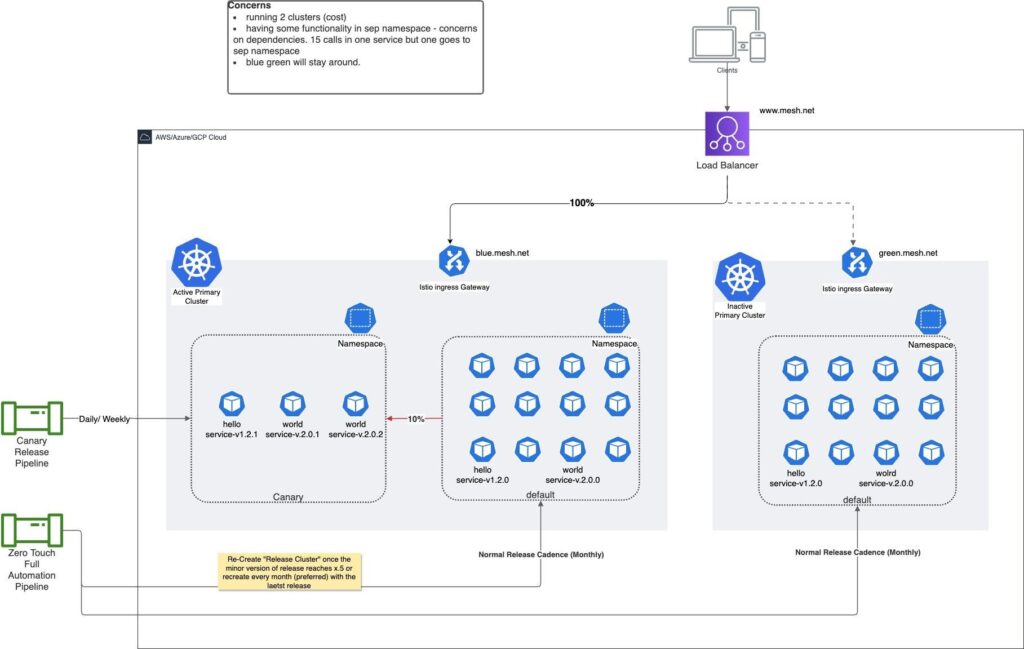

Parallel Cluster with Parallel Canary and Release Namespaces

Active-passive clusters are maintained in this approach (see Figure 8). Initially, both clusters are built using the latest release plan. When a new release is opened for development, a new namespace, ‘canary’, is created in the active cluster. All canary releases are deployed into that namespace until the release is closed. When the release is closed, the passive cluster is rebuilt with that latest release and takes over the role of active cluster. After a predetermined time (a day or two), the cluster that is now passive is also rebuilt with the latest release plan, bringing the two clusters back in line. The same process is repeated every time a new release is opened.

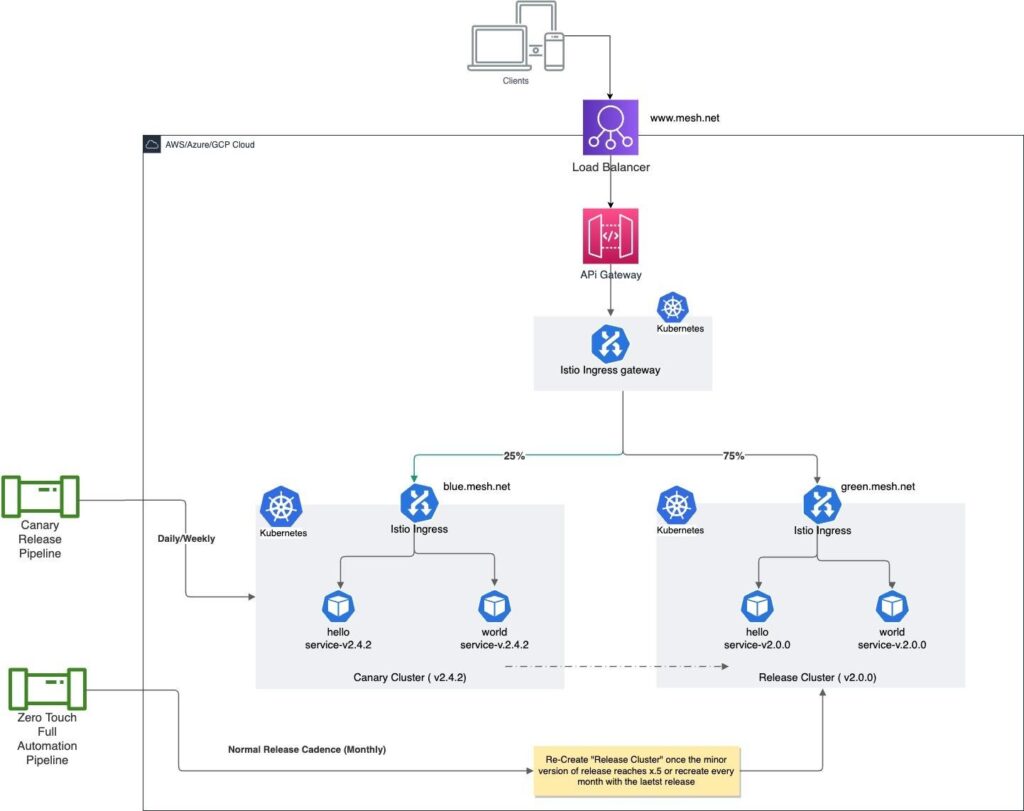

Parallel Active Clusters (Canary and Release) Routed at Gateway Level

Figure 9 illustrates the parallel active clusters approach, a unique gateway-active-active formation of parallel clusters. One is a release cluster and the other is a canary cluster. After the release is opened, all releases of individual microservices are deployed as canaries into the canary cluster. When the release is closed, the release cluster is rebuilt with the most up-to-date plan. The routing intelligence is applied at the Gateway cluster level: using smart routing definitions, the gateway routes traffic to the newer version of a microservice deployed in the canary cluster.
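The gateway-level decision can be sketched in Python as follows. This is a simplified model rather than Istio configuration: the per-service weight table, the cluster labels `release` and `canary`, and the hash-based bucketing of request IDs are all assumptions made to show one way such a routing rule could behave.

```python
import hashlib
from typing import Mapping


def bucket(request_id: str) -> int:
    """Map a request ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100


def choose_cluster(service: str, request_id: str,
                   canary_weights: Mapping[str, int]) -> str:
    """Pick the target cluster for one request.

    canary_weights maps a service name to the percentage of its traffic
    that should reach the canary cluster; services without an entry have
    no canary and always go to the release cluster.
    """
    weight = canary_weights.get(service, 0)
    return "canary" if bucket(request_id) < weight else "release"
```

Because the bucket is derived from the request ID, the same request is always routed the same way for a given weight, and raising a service's weight only moves additional buckets to the canary cluster.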

Flexible design enables service mesh to be implemented and deployed effectively in a variety of architectures. Adaptability is a key benefit, enabling wider applicability and significant increases in productivity.

We have extensive experience implementing advanced service mesh solutions tailored to the needs of specific teams or organisations. To learn more about service mesh, refer to the earlier articles in our series:

- Why Istio Service Mesh Could Relieve Your Cloud Modernisation Headaches

- A Technical Deep Dive into Istio Service Mesh

- An Analysis of Istio Service Mesh

Or contact us if you’d like support in your journey with Istio service mesh installation.

Kamesh is a seasoned technology professional focused on solving real-world enterprise problems using modern, innovative architecture with a strong emphasis on zero-trust security. He has led some of the largest digital transformation projects end-to-end, from solution to delivery. He maintains a strong focus on customer orientation, security, innovation, and quality.